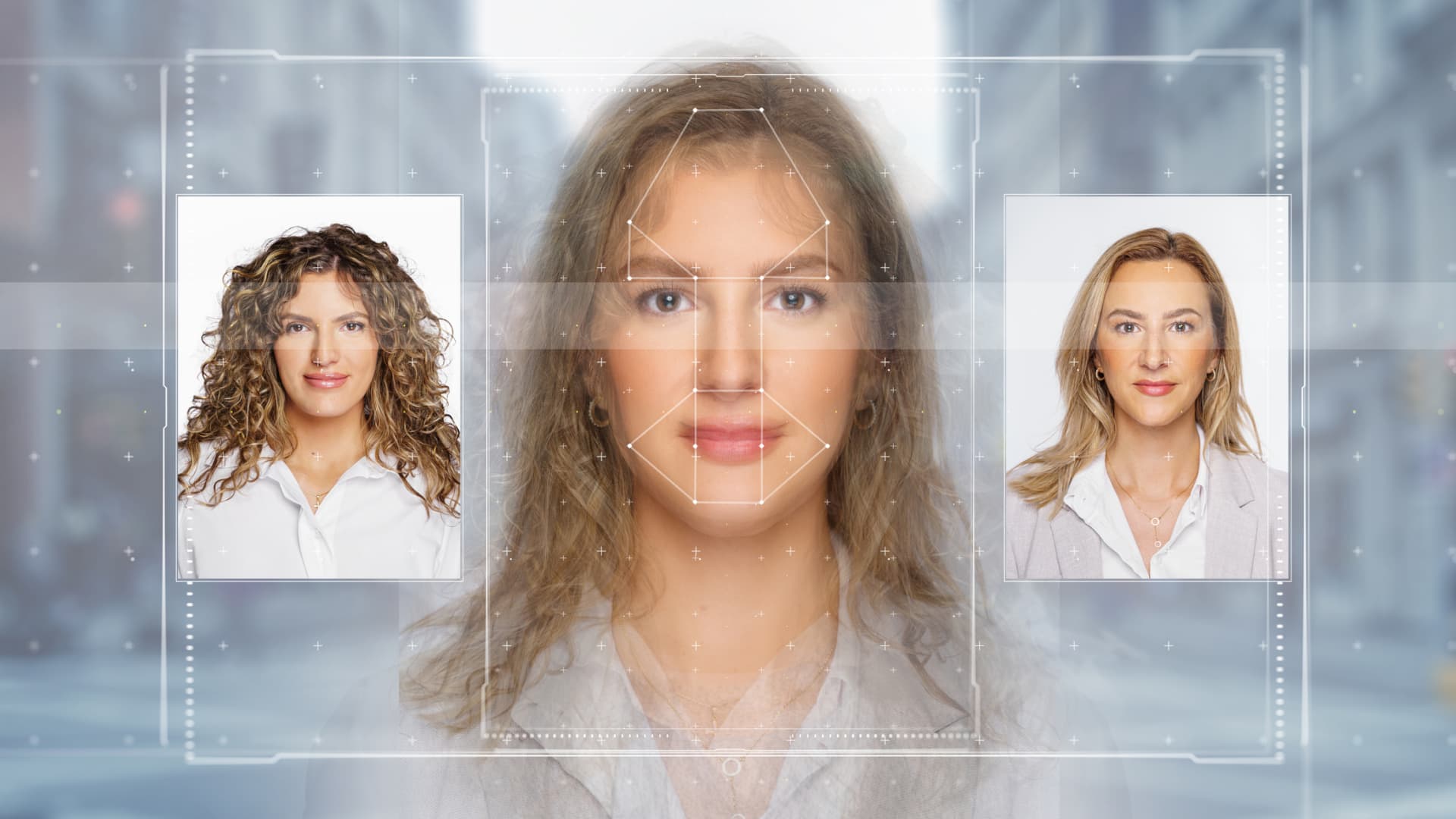

2024 is set up to be the biggest global election year in history. It coincides with the rapid rise in deepfakes. In APAC alone, there was a surge in deepfakes by 1,530% from 2022 to 2023, according to a Sumsub report.

Fotografielink | iStock | Getty Images

Ahead of the Indonesian elections on Feb. 14, a video of late Indonesian president Suharto advocating for the political party he once presided over went viral.

The AI-generated deepfake video that cloned his face and voice racked up 4.7 million views on X alone.

This was not a one-off incident.

In Pakistan, a deepfake of former prime minister Imran Khan emerged around the national elections, announcing his party was boycotting them. Meanwhile, in the U.S., New Hampshire voters heard a deepfake of President Joe Biden asking them not to vote in the presidential primary.

Deepfakes of politicians are becoming increasingly common, especially with 2024 set up to be the biggest global election year in history.

Reportedly, at least 60 countries and more than 4 billion people will be voting for their leaders and representatives this year, which makes deepfakes a matter of serious concern.

According to a Sumsub report in November, the number of deepfakes across the world rose by 10 times from 2022 to 2023. In APAC alone, deepfakes surged by 1,530% during the same period.

Online media, including social platforms and digital advertising, saw the biggest rise in identity fraud rate at 274% between 2021 and 2023. Professional services, healthcare, transportation and video gaming were also among the industries impacted by identity fraud.

Asia is not ready to tackle deepfakes in elections in terms of regulation, technology, and education, said Simon Chesterman, senior director of AI governance at AI Singapore.

In its 2024 Global Threat Report, cybersecurity firm CrowdStrike reported that with the number of elections scheduled this year, nation-state actors including from China, Russia and Iran are highly likely to conduct misinformation or disinformation campaigns to sow disruption.

“The more serious interventions would be if a major power decides they want to disrupt a country’s election; that’s probably going to be more impactful than political parties playing around on the margins,” said Chesterman.

However, most deepfakes will still be generated by actors within the respective countries, he said.

Carol Soon, principal research fellow and head of the society and culture department at the Institute of Policy Studies in Singapore, said domestic actors may include opposition parties and political opponents or extreme right wingers and left wingers.

Deepfake dangers

At the minimum, deepfakes pollute the information ecosystem and make it harder for people to find accurate information or form informed opinions about a party or candidate, said Soon.

Voters may also be put off by a particular candidate if they see content about a scandalous issue that goes viral before it’s debunked as fake, Chesterman said. “Although several governments have tools (to prevent online falsehoods), the concern is the genie will be out of the bottle before there’s time to push it back in.”

“We saw how quickly X could be taken over by the deep fake pornography involving Taylor Swift; these things can spread incredibly quickly,” he said, adding that regulation is often not enough and incredibly hard to enforce. “It’s often too little too late.”

Adam Meyers, head of counter adversary operations at CrowdStrike, said that deepfakes may also invoke confirmation bias in people: “Even if they know in their heart it’s not true, if it’s the message they want and something they want to believe in they’re not going to let that go.”

Chesterman also said that fake footage which shows misconduct during an election, such as ballot stuffing, could cause people to lose faith in the validity of an election.

On the flip side, candidates may deny the truth about themselves that may be negative or unflattering and attribute that to deepfakes instead, Soon said.

Who should be responsible?

There is a realization now that more responsibility needs to be taken on by social media platforms because of the quasi-public role they play, said Chesterman.

In February, 20 leading tech companies, including Microsoft, Meta, Google, Amazon and IBM, as well as artificial intelligence startup OpenAI and social media companies such as Snap, TikTok and X, announced a joint commitment to combat the deceptive use of AI in elections this year.

The tech accord signed is an important first step, said Soon, but its effectiveness will depend on implementation and enforcement. With tech companies adopting different measures across their platforms, a multi-prong approach is needed, she said.

Tech companies will also have to be very transparent about the kinds of decisions that are made, for example, the kinds of processes that are put in place, Soon added.

But Chesterman said it is also unreasonable to expect private companies to carry out what are essentially public functions. Deciding what content to allow on social media is a hard call to make, and companies may take months to decide, he said.

“We should not just be relying on the good intentions of these companies,” Chesterman added. “That’s why regulations need to be established and expectations need to be set for these companies.”

Towards this end, the Coalition for Content Provenance and Authenticity (C2PA), a non-profit, has introduced digital credentials for content, which will show viewers verified information such as the creator’s information, where and when it was created, as well as whether generative AI was used to create the material.

C2PA member companies include Adobe, Microsoft, Google and Intel.

OpenAI has announced it will be implementing C2PA content credentials to images created with its DALL·E 3 offering early this year.

“I think it’d be terrible if I said, ‘Oh yeah, I’m not worried. I feel great.’ Like, we’re gonna have to watch this relatively closely this year [with] super tight monitoring [and] super tight feedback.”

In a Bloomberg House interview at the World Economic Forum in January, OpenAI founder and CEO Sam Altman said the company was “quite focused” on ensuring its technology wasn’t being used to manipulate elections.

“I think our role is very different than the role of a distribution platform” like a social media site or news publisher, he said. “We have to work with them, so it’s like you generate here and you distribute here. And there needs to be a good conversation between them.”

Meyers suggested creating a bipartisan, non-profit technical entity with the sole mission of analyzing and identifying deepfakes.

“The public can then send them content they suspect is manipulated,” he said. “It’s not foolproof but at least there’s some sort of mechanism people can rely on.”

But ultimately, while technology is part of the solution, a large part of it comes down to consumers, who are still not ready, said Chesterman.

Soon also highlighted the importance of educating the public.

“We need to continue outreach and engagement efforts to heighten the sense of vigilance and consciousness when the public comes across information,” she said.

The public needs to be more vigilant; besides fact checking when something is highly suspicious, users also need to fact check critical pieces of information, especially before sharing it with others, she said.

“There’s something for everyone to do,” Soon said. “It’s all hands on deck.”

— CNBC’s MacKenzie Sigalos and Ryan Browne contributed to this report.